Top Data Engineering Tools in 2025

Every modern business today is data-driven. In 2024, 97.2% of organizations are investing in big data and AI to drive their business outcomes, according to a recent survey by McKinsey.

The global datasphere is expected to reach 175 zettabytes by 2025, growing to 491 zettabytes by 2027, making data engineering increasingly important. Efficient data engineering ensures businesses can manage massive datasets and glean actionable insights. Without strong data engineering practices, organizations may face data bottlenecks, leading to slower decision-making and missed opportunities.

Choosing the appropriate data engineering tool is essential for enhancing efficiency, scalability, and performance, whether you run a small business or a large corporation.

This guide explores the top data engineering tools that will dominate the industry in 2025.

Introduction to Data Engineering

Data engineering is the backbone of modern data processing. It involves designing and building systems that gather, store, and process data for analysis. As organizations increasingly rely on data for decision-making, data engineers play a pivotal role in creating data pipelines, maintaining databases, and ensuring the availability of high-quality data for analysis.

Why is Data Engineering Important?

In the era of big data, efficient data engineering ensures that organizations can harness the full potential of their data. By automating data workflows, integrating disparate data sources, and ensuring data accuracy, businesses can make informed decisions faster.

Reenbit’s data engineering services can help quickly assess your unique needs and identify the best tools for your data pipeline. Our team of experts ensures the highest data quality, timeliness, and reliability throughout the process.

Reach out to us today to fast-track the development of your data pipeline with the most effective solutions!

Key Features of Data Engineering Tools

When choosing data engineering tools, several key features should be considered:

- Scalability: Can the tool handle large amounts of data without performance degradation?

- Integration capabilities: Does it integrate seamlessly with other data platforms, databases, and APIs?

- Ease of use: How user-friendly is the tool for data engineers and analysts?

These features are critical in ensuring the tool can handle the complex demands of modern data environments.

Before making a choice, it’s essential to answer key questions to help guide the decision-making process.

What problem will it solve?

The primary question when selecting a data engineering tool is identifying the specific problem it will address.

How costly is it?

Cost is another key factor to consider. Think of the total cost of ownership: licensing fees for vendor solutions versus the resources needed to maintain and support open-source tools.
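
The comparison above can be made concrete with a little arithmetic. The sketch below is only an illustration: the function name, the cost model (yearly licence plus engineer time), and every figure are assumptions, not benchmarks for any real product.

```python
def total_cost_of_ownership(license_per_year, engineer_hours_per_year, hourly_rate, years):
    """Sum licensing fees and staff time over the evaluation period."""
    return years * (license_per_year + engineer_hours_per_year * hourly_rate)

# All figures are made up for illustration; substitute your own estimates.
vendor = total_cost_of_ownership(
    license_per_year=30_000, engineer_hours_per_year=100, hourly_rate=80, years=3
)
open_source = total_cost_of_ownership(
    license_per_year=0, engineer_hours_per_year=500, hourly_rate=80, years=3
)
print(vendor, open_source)
```

With these hypothetical inputs, the licence-free option ends up slightly more expensive because of the extra maintenance hours, which is why both sides of the equation need to be estimated.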

Can we build it?

Building a custom tool is an option, but it’s essential to consider the required resources. Custom-built solutions may allow you to tailor the tool to your needs, but consider the developer hours needed to build, test, and maintain the system.

How can we measure if the tool fits the bill?

After implementing a tool, measuring its effectiveness is essential to ensure it’s genuinely solving the problem.

Types of Data Engineering Tools

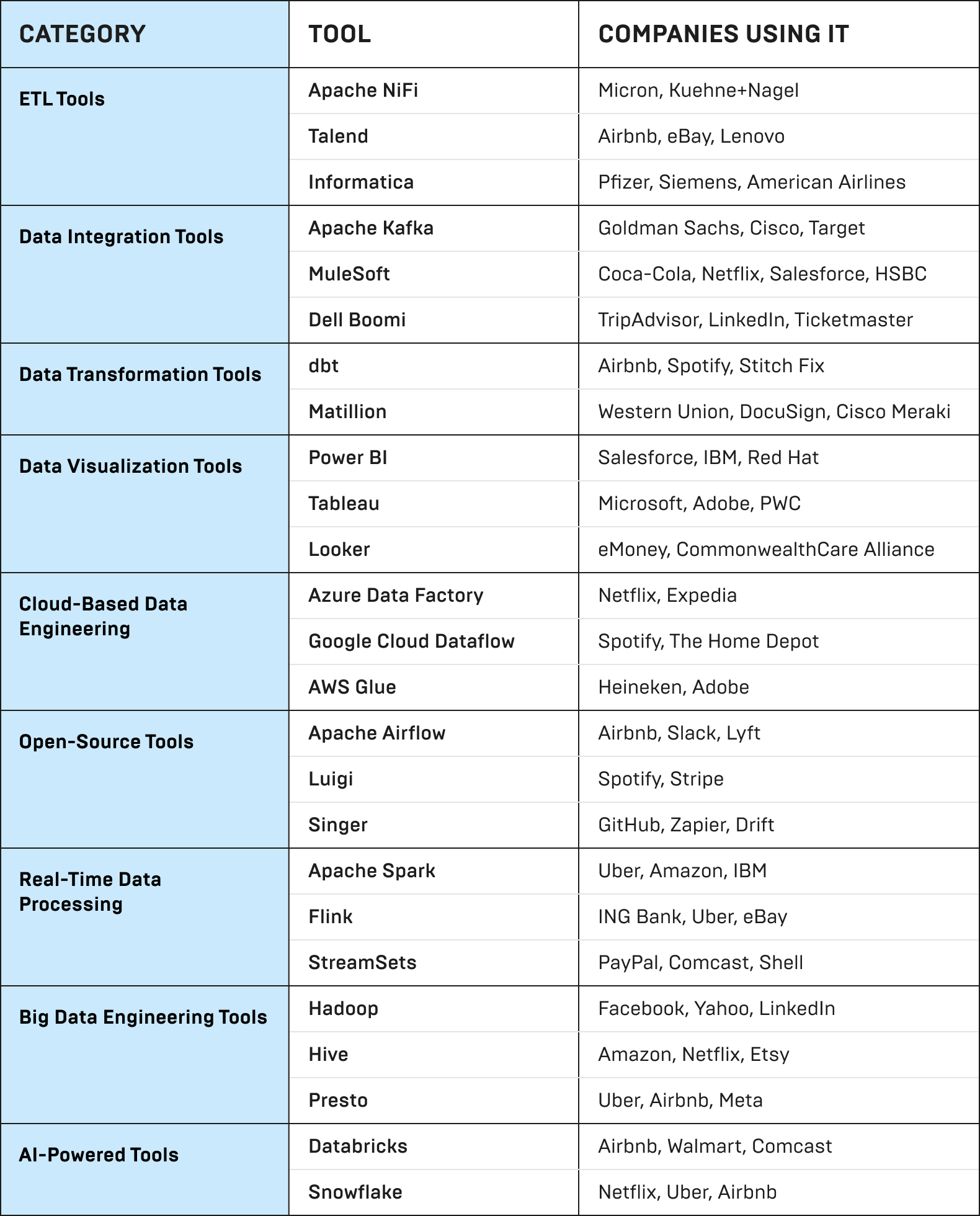

Data engineering tools can be categorized into various types based on their functions:

- ETL (Extract, Transform, Load) tools help extract data from different sources, transform it into the desired structure, and load it into a data warehouse.

- Data integration platforms enable the seamless integration of data from different systems.

- Data visualization tools allow analysts to create intuitive dashboards and reports for decision-makers.

Each tool serves a unique purpose, but they often work together in data workflows to improve efficiency and decision-making.
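
To make the extract, transform, and load steps described above more tangible, here is a minimal sketch using only the Python standard library. The sample data, table name, and function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,alice,not_a_number
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, cast amounts, drop rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip malformed amounts instead of loading bad data
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into a table (SQLite stands in for a warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Dedicated ETL tools add connectors, scheduling, and monitoring on top of this basic pattern, but the three stages stay the same.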

Top ETL Tools for Data Engineers

ETL tools are essential in transforming raw data into a structured format that is easy to analyze. Some of the best ETL tools available for data engineers in 2025 include:

Azure Data Factory, a Microsoft cloud service, enables building workflows for large-scale data transformation and transfer. It comprises a series of interconnected systems that together allow engineers not only to ingest and transform data but also to design, schedule, and monitor data pipelines. A wide array of connectors, such as those for MySQL, AWS, MongoDB, Salesforce, and SAP, is the key to Data Factory’s strength. It is also widely praised for its flexibility.

Companies using it: Heineken, Adobe

Apache NiFi offers an intuitive interface for automating data flows between systems. Its flexibility in handling both batch and real-time data makes it a popular choice for complex data workflows.

Companies using it: Micron, Kuehne+Nagel

Talend is a robust ETL tool with open-source roots that supports many integrations. It provides a comprehensive suite for data integration, transformation, and cleansing.

Companies using it: Airbnb, eBay, Lenovo

Informatica is a leader in the ETL space, offering cloud and on-premise data integration and governance solutions. Its platform helps businesses manage large-scale data transformations efficiently.

Companies using it: Pfizer, Siemens, American Airlines

Best Data Integration Tools

Data integration tools are designed to combine data from multiple sources into a unified view, helping organizations make better decisions. Some of the top data integration tools include:

Apache Kafka is a distributed event streaming platform allowing real-time data integration. It is widely used for building real-time data pipelines and streaming applications.

Companies using it: Goldman Sachs, Cisco, Target
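
The idea behind event streaming can be pictured as an append-only log that several consumers read at their own pace. The toy class below is a conceptual model only, not Kafka's client API; the class name, method names, and sample events are all hypothetical.

```python
from collections import defaultdict

class TopicLog:
    """Toy in-memory stand-in for one topic partition: an append-only list of events."""

    def __init__(self):
        self._events = []
        self._offsets = defaultdict(int)  # consumer group -> next offset to read

    def produce(self, event):
        """Append an event and return its offset in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def consume(self, group, max_events=10):
        """Return unread events for a consumer group and advance its offset."""
        start = self._offsets[group]
        batch = self._events[start:start + max_events]
        self._offsets[group] = start + len(batch)
        return batch

log = TopicLog()
for event in ({"user": "a", "action": "click"}, {"user": "b", "action": "view"}):
    log.produce(event)

analytics_batch = log.consume("analytics")        # reads the first two events
log.produce({"user": "a", "action": "purchase"})
analytics_next = log.consume("analytics")         # only the newly appended event
billing_batch = log.consume("billing")            # a separate group reads from the start
```

Because each consumer group keeps its own position, new downstream systems can be attached without changing the producers, which is a large part of the appeal for real-time integration.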

MuleSoft provides a powerful integration platform as a service (iPaaS) that connects applications, data, and devices. It’s ideal for creating hybrid integration solutions.

Companies using it: Coca-Cola, Netflix, Salesforce, HSBC

Boomi (formerly Dell Boomi) is a cloud-based data integration tool that enables organizations to connect data across various platforms quickly and securely.

Companies using it: TripAdvisor, LinkedIn, Ticketmaster

Data Transformation Tools

Transforming raw data into actionable insights requires specialized tools. Some of the best data transformation tools include:

dbt (Data Build Tool) is an open-source tool that allows data engineers to transform raw data into well-structured data sets that are easy to query. Its SQL-based approach makes it easy to integrate into existing data pipelines.

Companies using it: Airbnb, Spotify, Stitch Fix
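
The SQL-based approach can be illustrated without dbt itself: each transformation is written as a plain SELECT statement, and a runner materializes it so later models can build on it. The sketch below imitates that pattern with SQLite views. It does not use dbt's API, and the table names, model names, and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_orders (id INTEGER, customer TEXT, amount_cents INTEGER, status TEXT);
INSERT INTO raw_orders VALUES
  (1, 'alice', 2000, 'paid'),
  (2, 'bob', 500, 'refunded'),
  (3, 'alice', 2500, 'paid');
""")

# Each "model" is only a SELECT; the loop below decides how to materialize it.
MODELS = {
    "stg_orders": """
        SELECT id, customer, amount_cents / 100.0 AS amount, status
        FROM raw_orders
    """,
    "customer_revenue": """
        SELECT customer, SUM(amount) AS revenue
        FROM stg_orders
        WHERE status = 'paid'
        GROUP BY customer
    """,
}

# Materialize models as views, in an order where dependencies come first.
for name in ("stg_orders", "customer_revenue"):
    conn.execute(f"CREATE VIEW {name} AS {MODELS[name]}")

revenue = conn.execute(
    "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
).fetchall()
print(revenue)
```

Keeping every step as a named query makes the transformation logic easy to review, test, and version alongside the rest of the codebase.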

Matillion is a cloud-based data transformation tool that integrates with various cloud data warehouses. It provides a drag-and-drop interface that simplifies the transformation process.

Companies using it: Western Union, DocuSign, Cisco Meraki

Data Visualization and Reporting Tools

Data visualization tools are essential for turning complex data into easy-to-understand charts, graphs, and dashboards. Some of the top tools in this category include:

Power BI, by Microsoft, is a robust tool for creating real-time dashboards and reports. It integrates well with other Microsoft products, making it a top choice for businesses using Microsoft technologies.

Companies using it: Microsoft, Adobe, PwC

Tableau is one of the most popular data visualization tools in the market. It provides powerful features for creating interactive dashboards and reports, making it easier for decision-makers to analyze data.

Companies using it: Salesforce, IBM, Red Hat

Looker is a cloud-based data analytics and business intelligence tool. It helps businesses create custom dashboards and explore data more interactively.

Companies using it: emoney, Commonwealth Care Alliance

Cloud-Based Data Engineering Platforms

Cloud-based data engineering tools are essential for businesses that want to securely scale their operations and store large volumes of data. Some of the leading platforms include:

Azure Data Factory is a cloud-based data integration service from Microsoft. It helps businesses create pipelines that move and transform data across various platforms.

Companies using it: Heineken, Adobe

AWS Glue is a fully managed ETL service provided by Amazon Web Services. It makes it easy for users to prepare and load data for analytics without worrying about infrastructure management.

Companies using it: Netflix, Expedia

Google Cloud Dataflow is a fully managed stream and batch data processing service. It allows data engineers to build pipelines that process data in real-time.

Companies using it: Spotify, The Home Depot

Open-Source Data Engineering Tools

Many organizations favor open-source data engineering tools because of their flexibility and cost-effectiveness. Some notable open-source tools include:

Apache Airflow is a platform used to schedule and monitor workflows. It is highly flexible and integrates well with other tools in the data engineering ecosystem.

Companies using it: Airbnb, Slack, Lyft
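
Workflow schedulers of this kind model a pipeline as tasks with dependencies and run each task only after its upstream tasks have finished. The sketch below shows that core idea with Python's standard `graphlib` module (Python 3.9+). It is not Airflow code, and the task names and `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_tables": {"extract_orders", "extract_customers"},
    "build_report": {"join_tables"},
}

def run_pipeline(dependencies, tasks):
    """Run every task after all of its upstream tasks; return the execution order."""
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()  # a real scheduler would also handle retries, timing, and logging
        order.append(name)
    return order

results = []
# Placeholder task bodies that just record that they ran.
TASKS = {name: (lambda n=name: results.append(n)) for name in DEPENDENCIES}
execution_order = run_pipeline(DEPENDENCIES, TASKS)
print(execution_order)
```

The two extract tasks have no dependency on each other, so their relative order is not fixed; a production scheduler could run them in parallel.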

Luigi, developed by Spotify, is a Python-based package that helps data engineers build complex pipelines. It is particularly useful for batch data processing.

Companies using it: Spotify, Stripe

Singer is an open-source framework that allows for building data pipelines. It focuses on integration, making connecting various data sources and sinks easier.

Companies using it: GitHub, Zapier, Drift

Real-Time Data Processing Tools

Real-time data processing is becoming increasingly important as businesses seek faster decisions. Some of the best tools for real-time data processing include:

Apache Spark is an open-source unified analytics engine for big data processing. It is known for its speed and ease of use, making it ideal for real-time data processing.

Companies using it: Uber, Amazon, IBM

Apache Flink is another open-source platform for distributed stream processing. It excels in processing high-throughput, low-latency data streams.

Companies using it: ING Bank, Uber, eBay
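
A common building block in stream processing is the tumbling window: events are grouped into fixed, non-overlapping time intervals and aggregated per interval. The sketch below shows the idea in plain Python. It is not Flink's API, the function name and events are hypothetical, and it ignores concerns that real engines handle, such as out-of-order events and fault-tolerant state.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    Returns {window_start: {key: count}}.
    """
    windows = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}

# Hypothetical click events: (event time in seconds, page).
events = [(1, "home"), (4, "cart"), (9, "home"), (12, "home"), (19, "cart"), (21, "home")]
counts = tumbling_window_counts(events, window_seconds=10)
print(counts)
```

Here the six events fall into three ten-second windows starting at 0, 10, and 20 seconds, each with its own per-page counts.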

StreamSets provides a real-time data operations platform for designing, executing, and monitoring data pipelines.

Companies using it: PayPal, Comcast, Shell

AI-Powered Data Engineering Tools

Artificial Intelligence (AI) is entering data engineering, offering enhanced automation and decision-making capabilities. Some of the best AI-powered tools include:

Databricks is a unified analytics platform that leverages AI and machine learning to streamline data engineering workflows. It integrates with Apache Spark for high-performance processing.

Companies using it: Airbnb, Walmart, Comcast

Snowflake is a cloud-based data warehousing platform that uses AI to optimize query performance and data storage. It is a powerful tool for modern data engineering.

Companies using it: Netflix, Uber, Airbnb

Tools for Big Data Engineering

Big data tools help manage and analyze massive data sets. Some of the best tools for big data engineering include:

Hadoop is a well-established framework for storing and processing large data sets in a distributed environment. It remains one of the most widely used tools in big data engineering.

Companies using it: Facebook, Yahoo, LinkedIn

Hive is a data warehouse software built on top of Hadoop. It enables easy querying and management of large datasets using a SQL-like language.

Companies using it: Amazon, Netflix, Etsy

Presto is an open-source distributed SQL query engine that allows data engineers to query data from different data sources at scale.

Companies using it: Uber, Airbnb, Meta (formerly Facebook)

Security and Compliance in Data Tools

Data governance and security are paramount in data engineering. Data engineers must select tools that provide built-in security features and adhere to industry standards to ensure compliance.

Top Programming Languages for Data Engineering

Python

Python has become a cornerstone in data engineering thanks to its versatility and rich ecosystem of libraries like Pandas, NumPy, and PySpark. Its simplicity allows engineers to seamlessly build and automate ETL (Extract, Transform, Load) processes, analyze data, and integrate machine learning models.

SQL

No data engineering toolkit is complete without SQL. It remains the go-to language for querying and managing relational databases, enabling professionals to handle structured data effectively. Databases like PostgreSQL, Snowflake, and MySQL rely heavily on SQL for data modeling and querying large-scale datasets.

Java

Java is renowned for its scalability and performance, making it ideal for handling distributed data systems. Frameworks like Apache Hadoop and Apache Kafka utilize Java extensively, enabling real-time data pipelines and processing systems.

Scala

Often associated with big data, Scala is preferred for building scalable data pipelines, particularly in conjunction with Apache Spark. Because it runs on the Java Virtual Machine (JVM), it performs well for both stream processing and batch analytics.

Golang

Golang is gaining traction in data engineering due to its efficiency in handling concurrent tasks. It’s commonly used to build high-performance data pipelines and real-time data streaming applications.

R

Although traditionally a statistical programming language, R has carved out its niche in data engineering. It excels in data visualization, statistical analysis, and exploratory data tasks, supported by popular libraries like ggplot2 and dplyr.

The choice of programming language in data engineering depends on factors such as the specific use case, the scale of data, and the existing tech stack. For instance, Python and SQL dominate general data workflows, while Java and Scala are indispensable for big data environments like Spark and Hadoop.

Conclusion

Success in data engineering depends on choosing the right tools to streamline data processing, integration, and transformation. The right toolset can greatly enhance efficiency and empower better decision-making, from ETL solutions to data visualization platforms and cloud-based technologies.

Reach out to Reenbit experts for advice on selecting the best data engineering tools and services to meet your unique needs.

By harnessing the potential of top data engineering tools, businesses can stay competitive in 2025, transforming raw data into actionable insights that fuel growth and innovation.

FAQ

What are data engineering tools?

Data engineering tools are software platforms designed to help businesses efficiently collect, process, and integrate large volumes of data. These tools are critical for building scalable data pipelines and ensuring high-quality data is readily available for analysis.

How do data engineering tools benefit businesses?

By automating data collection, transformation, and integration, these tools simplify data workflows, enable faster and more informed decision-making, and enhance overall business operations with accurate insights.

Which ETL tools are best for small businesses?

Azure Data Factory, Talend, and Matillion are excellent choices for small businesses. They offer affordability, intuitive interfaces, and scalability, making them accessible and practical for smaller teams.

What is the future of data engineering?

The future of data engineering lies in adopting AI-driven and automated tools that can handle complex data workflows in real time. With the increasing shift to cloud-based solutions, businesses will gain access to even more scalable, efficient, and cost-effective data processing capabilities.